2.0 Release

See CHANGELOG.md for details. Videos are shown at 1x speed unless otherwise noted.

New Capabilities

Cartwheel: Toddy successfully performs a highly dynamic cartwheel, showcasing its agility and balance capabilities.

Cartwheel Failures: With naive DeepMimic and domain randomization, the success rate is still low. The good news is that Toddy is extremely robust: it almost never breaks, and even when it does, it is super easy to fix.
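DeepMimic-style training rewards the policy for tracking a reference motion, using exponentiated tracking errors. The sketch below shows only the pose and velocity terms. It assumes single-axis revolute joints, so the joint-angle difference is a plain subtraction. The scales (2.0 and 0.1) and weights (0.65 and 0.1) roughly follow the DeepMimic paper, and the end-effector and center-of-mass terms are omitted. This is an illustration of the technique, not ToddlerBot's actual cartwheel reward, and the function name is hypothetical.

```python
import math

def deepmimic_tracking_reward(q_ref, q, dq_ref, dq, w_pose=0.65, w_vel=0.1):
    """Hypothetical DeepMimic-style pose + velocity tracking reward.

    q_ref, q:   reference and measured joint angles (rad), same length.
    dq_ref, dq: reference and measured joint velocities (rad/s).
    Assumes single-axis joints, so the angle error is a plain difference.
    """
    pose_err = sum((a - b) ** 2 for a, b in zip(q_ref, q))
    vel_err = sum((a - b) ** 2 for a, b in zip(dq_ref, dq))
    r_pose = math.exp(-2.0 * pose_err)   # 1.0 when the pose matches exactly
    r_vel = math.exp(-0.1 * vel_err)     # 1.0 when velocities match exactly
    return w_pose * r_pose + w_vel * r_vel
```

Perfect tracking gives the maximum of w_pose + w_vel. Any tracking error decays the reward smoothly rather than cutting it off, which keeps the learning signal dense.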

Crawling: Toddy can crawl like a toddler, using its arms and legs in a coordinated manner.

Walking Faster: Toddy can walk faster in all directions (up to 0.25 m/s) and rotate faster in place (up to 1 rad/s).
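A walking policy's velocity commands are usually kept inside the range it was trained on. The sketch below clamps an omnidirectional command to the limits stated above. It assumes that 0.25 m/s bounds the planar speed magnitude in any direction and that the yaw-rate limit is applied independently. The class and function names are hypothetical, and this is not the actual ToddlerBot command interface.

```python
import math
from dataclasses import dataclass

MAX_LIN_SPEED = 0.25  # m/s, stated walking limit (assumed to bound planar speed)
MAX_YAW_RATE = 1.0    # rad/s, stated in-place rotation limit

@dataclass
class VelocityCommand:
    vx: float  # forward velocity (m/s)
    vy: float  # lateral velocity (m/s)
    wz: float  # yaw rate (rad/s)

def clamp_command(cmd: VelocityCommand) -> VelocityCommand:
    """Scale (vx, vy) down to the speed limit, preserving direction; clip wz."""
    speed = math.hypot(cmd.vx, cmd.vy)
    scale = 1.0 if speed <= MAX_LIN_SPEED else MAX_LIN_SPEED / speed
    wz = max(-MAX_YAW_RATE, min(MAX_YAW_RATE, cmd.wz))
    return VelocityCommand(cmd.vx * scale, cmd.vy * scale, wz)
```

Scaling the planar component as a vector, rather than clipping vx and vy separately, keeps the commanded walking direction unchanged.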

VR Teleoperation: Real-time VR teleoperation with Meta Quest 2.

Foundation Stereo Depth: On-board (Jetson Orin NX 16GB), real-time (10 Hz) depth estimation from stereo fisheye cameras with Foundation Stereo.
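Stereo networks of this kind typically predict a disparity map. On a rectified image pair, metric depth follows from Z = f * B / d, where f is the focal length in pixels, B is the camera baseline in meters, and d is the disparity in pixels. The sketch below assumes the fisheye images have already been rectified to a pinhole model. The focal length and baseline in the usage are hypothetical placeholders, not ToddlerBot's calibration.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m, min_disp=1e-3):
    """Convert a disparity map (pixels) to depth (meters) via Z = f * B / d.

    Assumes a rectified pinhole stereo pair. Pixels with disparity at or
    below min_disp are marked as infinitely far (invalid).
    """
    disparity = np.asarray(disparity, dtype=np.float64)
    depth = np.full_like(disparity, np.inf)
    valid = disparity > min_disp
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical calibration: 300 px focal length, 6 cm baseline.
depth = disparity_to_depth([30.0, 0.0, 60.0], focal_px=300.0, baseline_m=0.06)
```

Depth is inversely proportional to disparity, so depth resolution degrades quickly for distant points. This is one reason a small stereo baseline suits near-range perception.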

Design

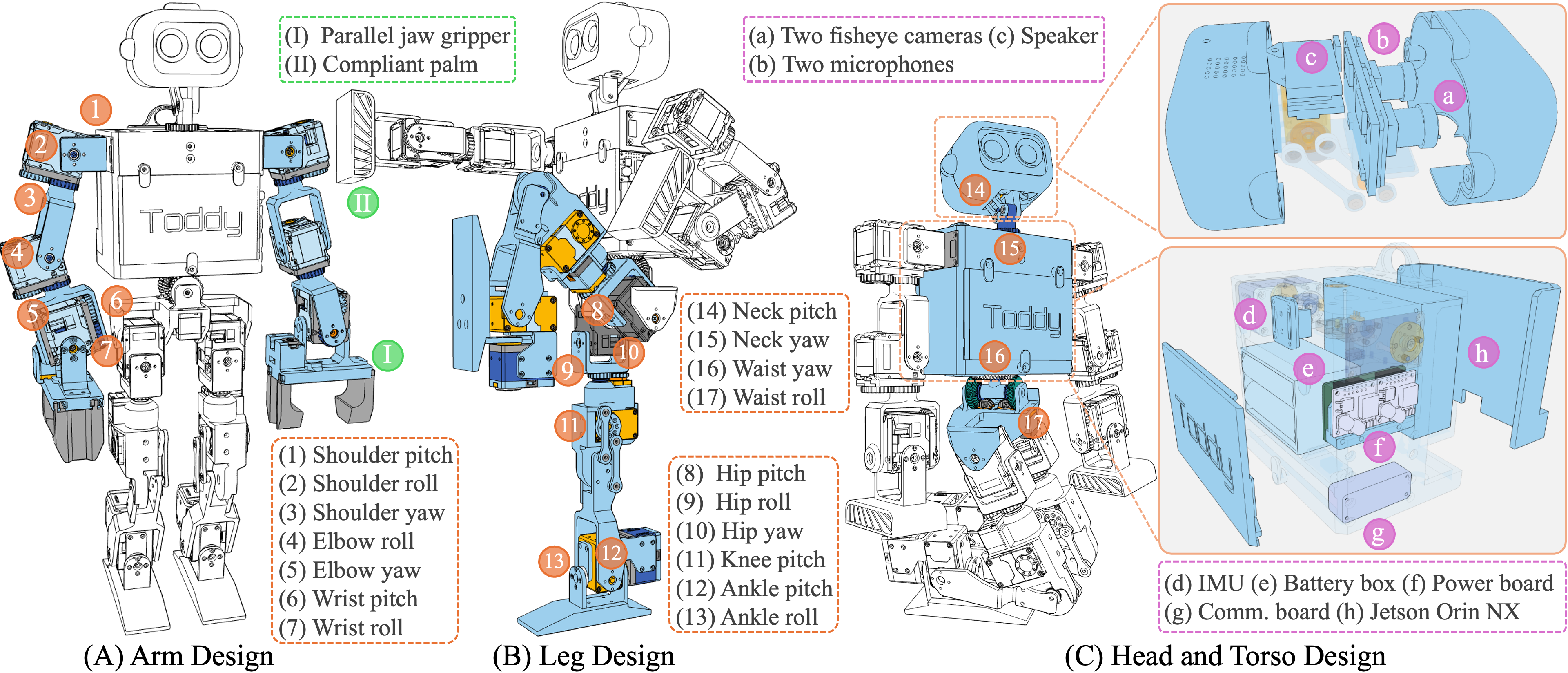

We present ToddlerBot's mechatronic design in the figure above. We highlight ToddlerBot's 30 active DoFs with orange markers: 7 DoFs per arm, 6 DoFs per leg, a 2-DoF neck, and a 2-DoF waist. Green markers indicate two end-effector designs: a compliant palm and a parallel-jaw gripper. Purple markers denote the sensor and electronics layout with exploded views, featuring two fisheye cameras, a speaker, two microphones, an IMU, and a Jetson Orin NX computer.
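The DoF breakdown above adds up to 30 (7 + 7 + 6 + 6 + 2 + 2). A policy's flat action vector then has to be partitioned across these joint groups. The sketch below builds one such partition. The group names and their ordering are hypothetical and do not reflect ToddlerBot's actual joint ordering.

```python
from itertools import accumulate

# Hypothetical ordering of joint groups in a flat 30-D action vector.
DOF_GROUPS = [
    ("left_arm", 7), ("right_arm", 7),
    ("left_leg", 6), ("right_leg", 6),
    ("neck", 2), ("waist", 2),
]

def group_slices(groups):
    """Map each group name to its contiguous slice of the action vector."""
    ends = list(accumulate(n for _, n in groups))
    starts = [0] + ends[:-1]
    return {name: slice(s, e) for (name, _), s, e in zip(groups, starts, ends)}

SLICES = group_slices(DOF_GROUPS)
TOTAL_DOFS = sum(n for _, n in DOF_GROUPS)  # 30 active DoFs
```

With this layout, `action[SLICES["neck"]]` selects the two neck joints of an action vector.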

Results

Capability: Arm Span, Payload, and Endurance

Arm Span Test: With a torso volume of 13×9×12 cm³, ToddlerBot successfully grasps objects 14 times its torso volume (27×24×31 cm³) using its compliant palm gripper.

Payload Test: ToddlerBot lifts 1,484 g (40% of its total weight) while maintaining balance. To determine the limit, screws are incrementally added to a 3D-printed gripping cup until it falls.

Endurance Test: Running a walking RL policy, ToddlerBot lasts 19 minutes before overheating affects stability. It withstands up to 7 falls before breaking, but repairs take only 21 minutes of 3D printing and 14 minutes of assembly for full restoration.
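The figures quoted in the three tests above can be cross-checked with a few lines of arithmetic. The torso and object volumes are 1,404 cm³ and 20,088 cm³, a ratio of about 14.3. The 1,484 g payload at 40% implies a total robot mass of roughly 3.7 kg. That mass is derived here and not stated in the text, and since the 40% figure is presumably rounded, it is approximate. A full repair takes 35 minutes in total. The constant and function names are illustrative.

```python
from math import prod

# Figures quoted in the arm span, payload, and endurance tests.
TORSO_CM = (13, 9, 12)
OBJECT_CM = (27, 24, 31)
PAYLOAD_G = 1484
PAYLOAD_FRACTION = 0.40        # "40% of its total weight", presumably rounded
PRINT_MIN, ASSEMBLY_MIN = 21, 14

def sanity_check():
    torso_vol = prod(TORSO_CM)    # 13 * 9 * 12 = 1404 cm^3
    object_vol = prod(OBJECT_CM)  # 27 * 24 * 31 = 20088 cm^3
    return {
        "volume_ratio": object_vol / torso_vol,             # about 14.3
        "implied_mass_g": PAYLOAD_G / PAYLOAD_FRACTION,     # about 3710 g
        "repair_min": PRINT_MIN + ASSEMBLY_MIN,             # 35 minutes
    }

results = sanity_check()
```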

Capability: Conversation, Push-ups, and Pull-ups

Conversation and Push-ups: Conversation is achieved by integrating OpenAI's Realtime API with GPT-4o for speech-to-text and text-to-speech. Push-ups are a zero-shot sim-to-real transfer of open-loop keyframe animation.
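Open-loop keyframe animation replays a time-indexed sequence of joint targets without using sensor feedback. The sketch below interpolates linearly between keyframes and emits targets at a fixed control period. Linear interpolation, the function names, and the data layout are all assumptions for illustration. The actual animation pipeline may interpolate differently.

```python
from bisect import bisect_right

def interpolate_keyframes(times, poses, t):
    """Linearly interpolate joint targets between keyframes at time t (s).

    times: strictly increasing keyframe timestamps.
    poses: one list of joint targets (rad) per keyframe.
    """
    if t <= times[0]:
        return list(poses[0])
    if t >= times[-1]:
        return list(poses[-1])
    i = bisect_right(times, t) - 1  # index of the segment containing t
    alpha = (t - times[i]) / (times[i + 1] - times[i])
    return [a + alpha * (b - a) for a, b in zip(poses[i], poses[i + 1])]

def play_open_loop(times, poses, dt):
    """Yield joint targets every dt seconds; no feedback is used."""
    n_steps = int(round((times[-1] - times[0]) / dt)) + 1
    for k in range(n_steps):
        yield interpolate_keyframes(times, poses, times[0] + k * dt)
```

Because no feedback is used, the motion succeeds on hardware only if the simulated and real dynamics agree closely enough. This is what "zero-shot sim-to-real" relies on here.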

Pull-ups: We use an AprilTag to help ToddlerBot accurately locate the horizontal bar. The rest is a zero-shot sim-to-real transfer of open-loop keyframe animation.
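Locating the bar from an AprilTag comes down to chaining rigid-body transforms. A tag detector returns the tag's pose in the camera frame, and the camera's pose in the robot base frame can come from head kinematics. A measured offset then gives the bar relative to the tag. All frames, the offset, and the function names below are hypothetical. The sketch does not depend on any particular detector library.

```python
import numpy as np

def make_transform(R, p):
    """Build a 4x4 homogeneous transform from rotation R (3x3) and translation p."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float)
    T[:3, 3] = np.asarray(p, dtype=float)
    return T

def bar_in_base(T_base_cam, T_cam_tag, p_tag_bar):
    """Express the bar position (given in the tag frame) in the robot base frame."""
    p = np.append(np.asarray(p_tag_bar, dtype=float), 1.0)  # homogeneous point
    return (T_base_cam @ T_cam_tag @ p)[:3]
```

For example, with the camera 0.3 m above the base and the tag 0.2 m ahead of the camera (both unrotated), a bar 5 cm above the tag lands at (0.2, 0.0, 0.35) in the base frame.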

ML-Compatibility: Locomotion and Manipulation

Omnidirectional Walking: ToddlerBot achieves omnidirectional walking with RL and zero-shot sim-to-real.

Bimanual Manipulation: This is an RGB-based diffusion policy trained with 60 demonstrations.

Full-body Manipulation: This is also an RGB-based diffusion policy trained with 60 demonstrations.
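A diffusion policy produces actions by starting from Gaussian noise and iteratively denoising it with a learned noise-prediction network conditioned on observations, such as RGB image features. The sketch below shows a standard DDPM ancestral sampling loop over an action chunk. It uses a linear beta schedule and sigma_t^2 = beta_t. The network is a stand-in argument, and the schedule, horizon, and action dimension are hypothetical. None of this is claimed to be ToddlerBot's configuration.

```python
import numpy as np

def linear_beta_schedule(n_steps, beta_start=1e-4, beta_end=2e-2):
    return np.linspace(beta_start, beta_end, n_steps)

def sample_actions(eps_model, obs, horizon, action_dim, n_steps=50, seed=0):
    """DDPM ancestral sampling of a (horizon, action_dim) action chunk.

    eps_model(x, t, obs) must return predicted noise with the same shape as x.
    """
    rng = np.random.default_rng(seed)
    betas = linear_beta_schedule(n_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal((horizon, action_dim))  # start from pure noise
    for t in reversed(range(n_steps)):
        eps = eps_model(x, t, obs)
        # Posterior mean: (x - beta_t / sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_t)
        coef = betas[t] / np.sqrt(1.0 - alpha_bars[t])
        mean = (x - coef * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # no noise is added at the final step
    return x
```

In practice eps_model would be a trained network that encodes the camera images. A placeholder such as `lambda x, t, obs: np.zeros_like(x)` only exercises the loop.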

Skill Chaining: ToddlerBot first executes a diffusion policy to grasp the handle, then, while maintaining that pose, switches to the RL policy to push the wagon forward.
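The handoff can be sketched as a small controller. It runs the grasping policy until a completion check fires, freezes the arm targets at that moment, and then lets the locomotion policy drive the remaining joints. The completion check, the arm joint indices, and the class name are hypothetical. How ToddlerBot actually triggers and blends the switch is not specified here.

```python
class SkillChain:
    """Run a grasp policy until done, then switch to a push policy with arms held."""

    def __init__(self, grasp_policy, push_policy, grasp_done, arm_idx):
        self.grasp_policy = grasp_policy  # obs -> full joint-target list
        self.push_policy = push_policy    # obs -> full joint-target list
        self.grasp_done = grasp_done      # obs -> bool (hypothetical trigger)
        self.arm_idx = list(arm_idx)      # indices of the arm joints to hold
        self.held_arm = None              # arm targets frozen at the switch

    def act(self, obs):
        if self.held_arm is None:
            action = list(self.grasp_policy(obs))
            if self.grasp_done(obs):
                # Freeze the arm targets so the grasp is maintained afterwards.
                self.held_arm = [action[i] for i in self.arm_idx]
            return action
        action = list(self.push_policy(obs))
        for i, v in zip(self.arm_idx, self.held_arm):
            action[i] = v  # override arm joints with the held grasp pose
        return action
```

Holding the arm joints fixed keeps the two policies from competing for the same actuators during the push.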

Reproducibility: Hardware and Policies

Manipulation Policy Zero-Shot Transfer: We successfully transfer a manipulation policy trained with data collected on one ToddlerBot instance to another.

Two-Instance Collaboration Task: To demonstrate that two ToddlerBot instances perform equivalently, both robots collaborate on a long-horizon room-tidying task.

Easy-to-Build Test: We show that ToddlerBot is easy to build using fully open-source assembly manuals and videos.